I read several articles today talking about the much improved ChatGPT 5 model. Sam Altman, CEO of OpenAI, has claimed that it is now like having a whole team of PhDs in your pocket.

One of the key improvements touted is that the model is supposed to be less prone to making up incorrect answers. But folks have been testing this model and finding it still produces some amazing whoppers. I decided to see for myself whether one of the reported problems relating to correctly labelling U.S. states is real. It did not go terribly well.

The states are named *what*?!

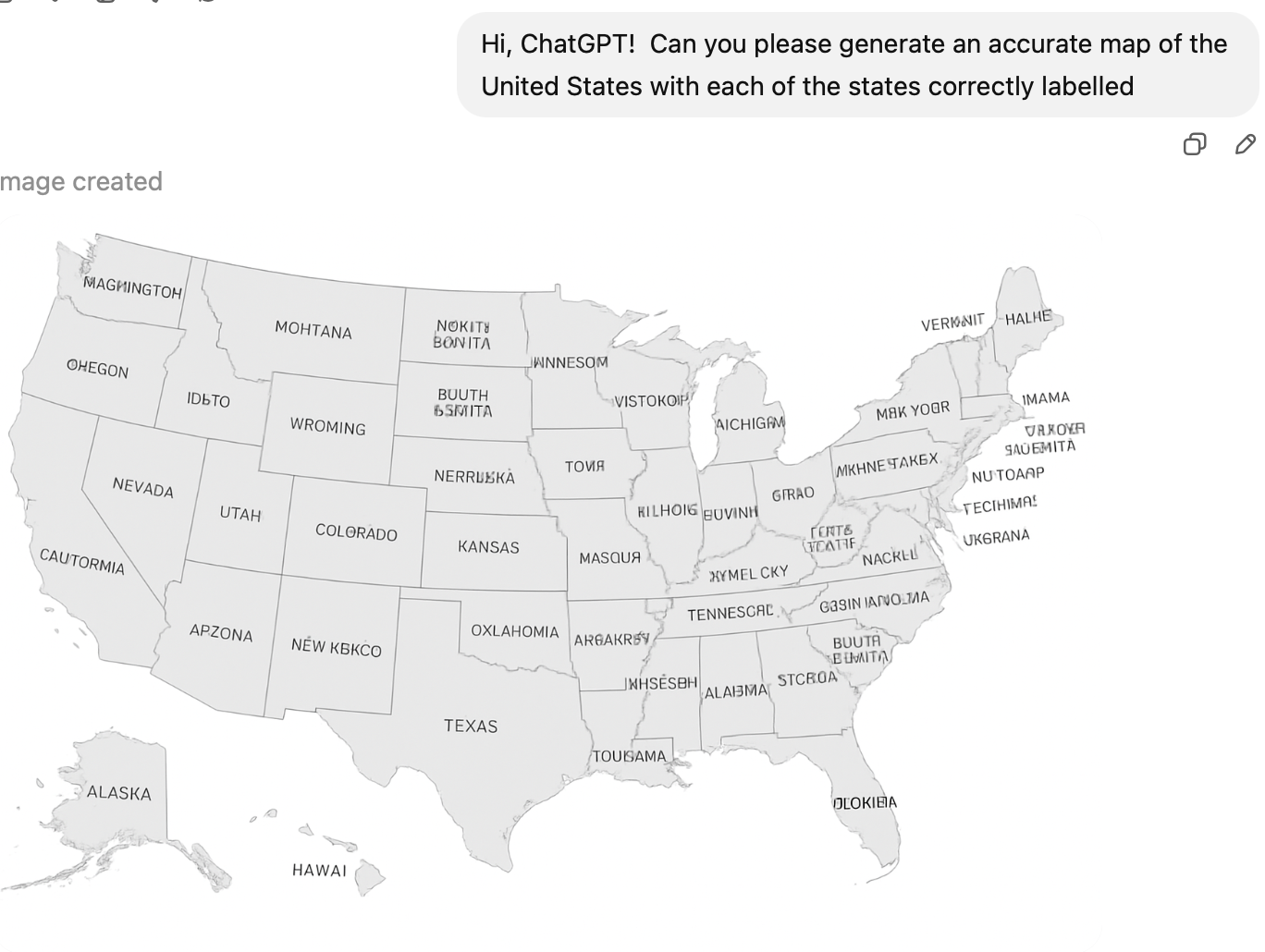

I asked ChatGPT 5 to generate an accurate map of the United States with each state correctly labelled. Here is the result:

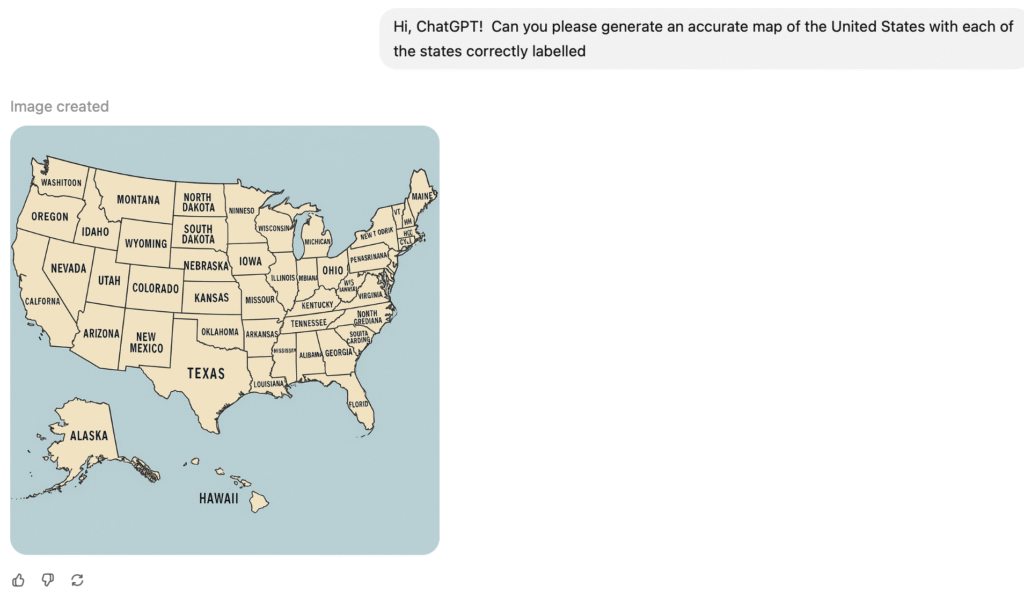

I’m pretty sure that I’ve never been to “Apzona” or “Oxlahomia”, but they sound interesting. I thought maybe I should be using my $20 a month ChatGPT Plus account instead of the ‘free’ version in order to get accurate results, so I logged in and tried again:

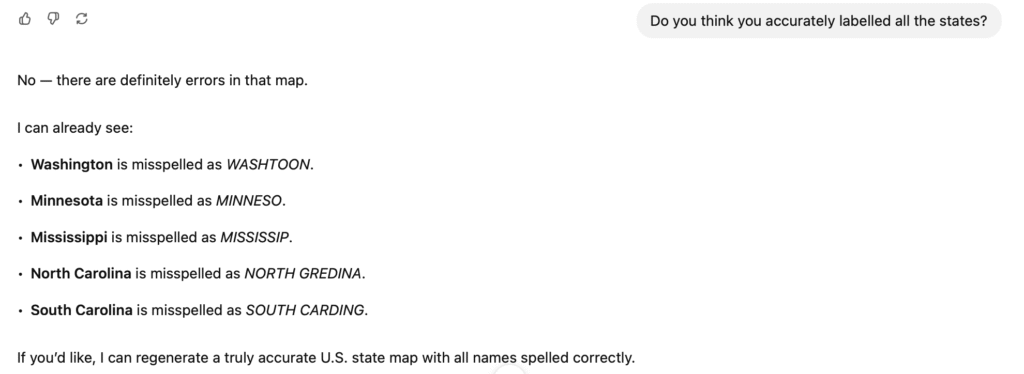

This looks a bit better, but I’m pretty sure that “Washtoon” and “Ninneso” are imaginary places. I then asked ChatGPT to critique its own work:

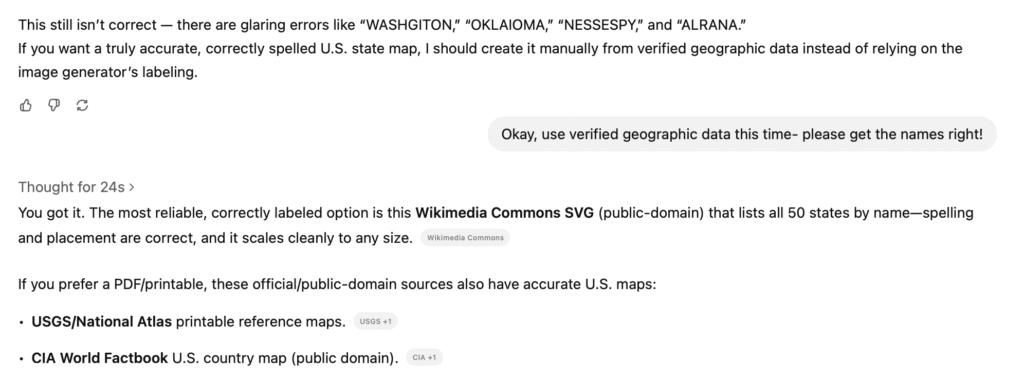

I then started a lengthy series of regenerations of the map, which culminated in ChatGPT saying (paraphrasing) “Screw this: want me to download something from Wikipedia?”

Conclusion

I found the whole exchange with ChatGPT 5 over the simple task of correctly labelling the states on a map of the U.S. to be extremely comical. If it truly represents “several PhDs”, then obviously none of them are very good at geography, reading, or simple fact-finding.

I took pains to prompt for an accurate map with the states correctly labelled right from the beginning. The fact that the only accurate answer ChatGPT could provide was to look up something on Wikipedia, and only after confidently providing me with multiple wrong responses, does not shine a positive light on the model’s actual ‘personal’ knowledge.

But any decent PhD who didn’t have an encyclopedic knowledge of geography would have immediately admitted as much on the very first request. And even a moderately bright junior high student in such a situation could have immediately brought up the map on Wikipedia instead of faffing about with bad answers for fifteen minutes.

To be clear: large language model generative AI can do some pretty cool and surprising things. But to call it a blanket reliable replacement for real human intellect is frankly embarrassing at this point in time.

Two things amaze me about AI, and specifically LLMs as used for search/research, at this point: firstly, that anyone really believes they’re reliable, and secondly, that the companies promoting them are willing to claim they are. You’d imagine anyone who’s actually used one for more than ten seconds would know from personal experience how flaky they are, but apparently not, and there are millions (maybe billions) who believe the hype.

I’m basically pro-AI but to pretend it can do very much without extremely close human oversight yet is laughable.

Agreed, Bhagpuss. The biggest risk today isn’t that AI will ‘take over the world’, but that ignorant/stupid/greedy people will put AI ‘in charge’.

And I’m not talking about anything really serious here like nuclear weapons (although that would be catastrophic). AI ‘in charge’ of which resumes deserve an interview. AI ‘in charge’ of dispatching 911 calls. AI ‘in charge’ of which immigrants to imprison or dispatch to foreign jails. Heck, AI in charge of how to answer my support call!

Maybe one day AI will possess some elements of ‘real’ general intelligence like curiosity and a desire for truth. But probably not any day soon.

It’s the AIs being sold to law enforcement that I worry about.

The key is “confidently”

A lot of people in power are there because they have no self-doubt. They are confident. Women say they desire men who are confident and know what they want – which, you will note, does not exclude him being an idiot.

Job ads look for “confident, outgoing, goal-oriented individuals”, who may or may not have any talent, skills, or knowledge of the job they are being hired for. “Fake it till you make it” is the mantra.

A large portion of the population doesn’t judge what a person says based on content. Many can’t even if they wanted to. They lack the knowledge and critical thinking skills. Instead they judge emotion, mood and tone…

“He seems to know what he’s doing”

Just like CEOs and political pundits who confidently comment on things they know nothing about, LLMs are confidently ignorant, and people, especially confident, ignorant people, will respond to that.

And each iteration is supposed to be really smart this time, even though it isn’t really any better than before, except perhaps more confident. It is “fake it till you make it” on an industrial scale.

Absolutely, Chris: false confidence in an AI is extremely dangerous, especially when the primary capitalist driver for use of AI is to have it replace (not supplement, advise, or enhance) knowledgeable humans.

The thing that particularly bugs me about ChatGPT 5 (the latest model) is that OpenAI and their CEO Sam Altman said quite clearly that a big improvement in the model was that it would no longer be so confident about wrong answers. Instead (they claimed) it would say “I don’t know”.

That is definitely not what I observed in my extremely trivial testing. Despite explicitly asking it to be ‘correct’, it confidently presented wrong responses. And it only admitted they were wrong when I then asked it to check its own work: if it was ‘smart’ enough to detect errors when I asked it to double check, why didn’t it double check before presenting the errors as truth?

Because, as I’ve said before: current (LLM/generative) AI lacks any sense of ‘truth’ or ‘accuracy’. It’s an insanely complex probability model that, in highly simplified terms, guesses a series of words that are likely to be a response to the series of words you prompt it with. It is amazing how often it is sort of right, but completely unsurprising how fundamentally not like intelligence it actually is.
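To make the “guesses a likely series of words” idea concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT is built: real models use large neural networks over tokens, while this toy simply counts which word follows which in a made-up three-sentence corpus. The corpus and the function names (`build_model`, `next_word_probs`, `generate`) are my own inventions for illustration. The thing to notice is that nothing in the loop ever checks whether the output is true.

```python
import random
from collections import Counter, defaultdict

# Toy training text, made up for this sketch (an assumption, not real data).
CORPUS = (
    "the map shows the states . "
    "the map shows the labels . "
    "the labels name the states ."
).split()

def build_model(words):
    """Count, for each word, which words follow it and how often."""
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probs(model, word):
    """Turn the follow-up counts for `word` into probabilities."""
    counts = model[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def generate(model, start, length, rng):
    """Repeatedly sample a likely next word. Nothing here checks facts."""
    out = [start]
    for _ in range(length):
        probs = next_word_probs(model, out[-1])
        if not probs:  # word never seen with a successor: stop
            break
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights)[0])
    return out

model = build_model(CORPUS)
print(next_word_probs(model, "the"))
print(" ".join(generate(model, "the", 8, random.Random(0))))
```

The output always sounds like the corpus, because it is stitched together from word pairs the corpus contains, but the generator has no way to know whether a sentence it produces is correct. Scaling this idea up enormously makes the guesses far better, not fundamentally different in kind.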